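I've been wanting to learn k8s lately, so the first step is to get an environment up and running. I'll work out how to actually use it later.

# Environment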

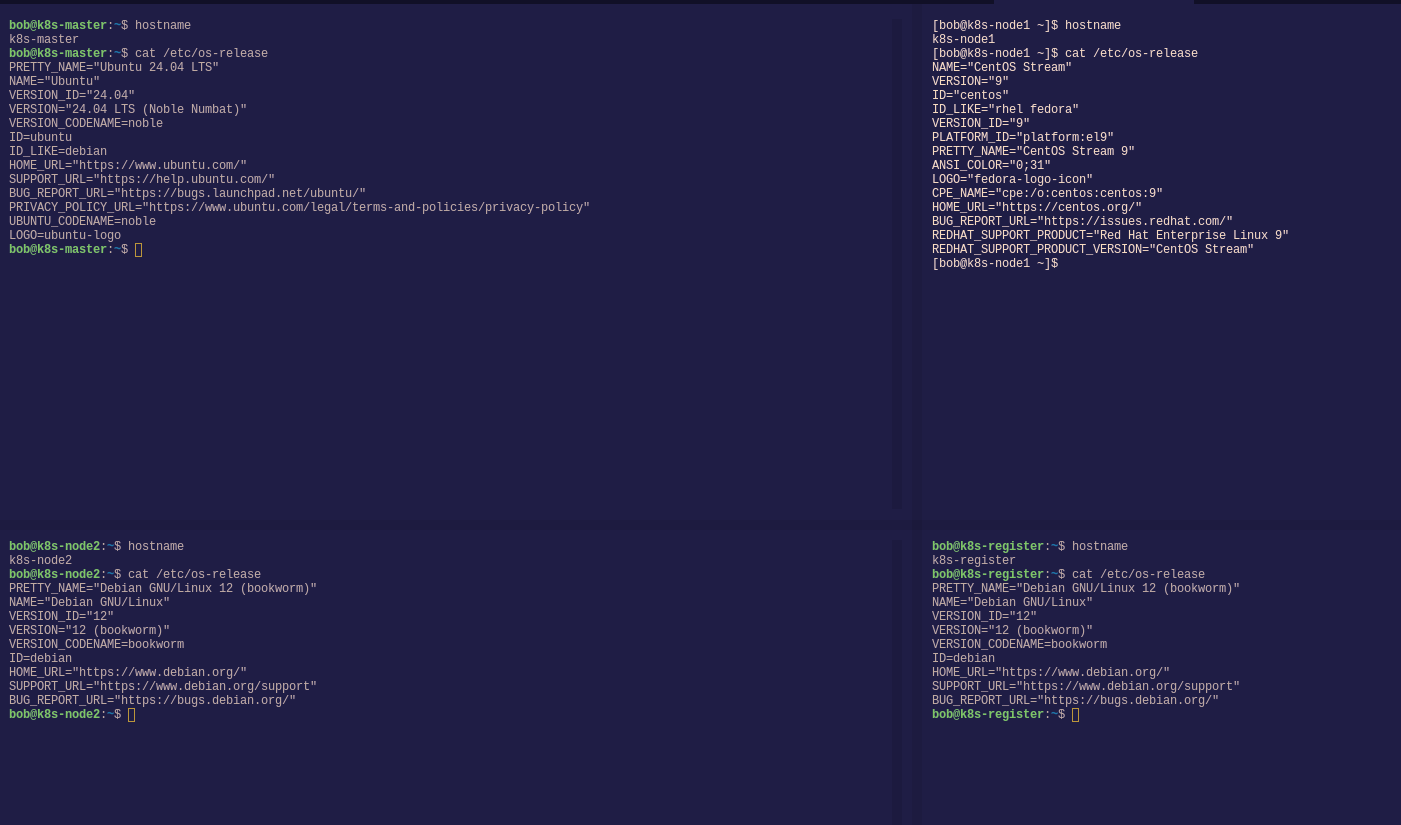

| OS | Hostname | Domain | IP address | Installed services | Notes |
|---|---|---|---|---|---|
| Ubuntu 24.04 | k8s-master | keeponline.cn | 192.168.80.150 | containerd, kubeadm, kubectl, kubelet, Flannel | k8s control plane node |
| CentOS 9 | k8s-node1 | keeponline.cn | 192.168.80.151 | containerd, kubeadm, kubectl, kubelet | k8s worker node 1 |
| Debian 12 | k8s-node2 | keeponline.cn | 192.168.80.152 | containerd, kubeadm, kubectl, kubelet | k8s worker node 2 |
| Debian 12 | k8s-registry | keeponline.cn | 192.168.80.153 | docker-ce, harbor | local image registry server |

If you have your own public domain, create DNS records for these hosts with your provider and request a certificate for the k8s-registry hostname.

If you don't, add the corresponding entries to the hosts file on each server by hand.
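For the hosts-file route, a minimal sketch like the one below prints the four entries from the table. The function name `hosts_entries` is made up for illustration, and it assumes each fully qualified name is simply the hostname followed by the keeponline.cn domain.

```shell
# Print /etc/hosts lines for the four machines in the table above.
# Assumption: FQDN = <hostname>.<domain>; adjust IPs and names to your own environment.
hosts_entries() {
  domain="keeponline.cn"
  while read -r ip name; do
    printf '%s %s.%s %s\n' "$ip" "$name" "$domain" "$name"
  done <<'EOF'
192.168.80.150 k8s-master
192.168.80.151 k8s-node1
192.168.80.152 k8s-node2
192.168.80.153 k8s-registry
EOF
}

# Preview the entries; nothing is written to disk here.
hosts_entries
```

After reviewing the output, it could be appended on each server with `hosts_entries | sudo tee -a /etc/hosts`.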

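# Pre-deployment configuration

Before deploying k8s, the k8s-master, k8s-node1 and k8s-node2 servers need some preparatory configuration, plus containerd and the kubeadm deployment tool. The three servers in my environment run different Linux distributions, but apart from the commands for installing kubeadm, almost everything else is the same on all of them.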

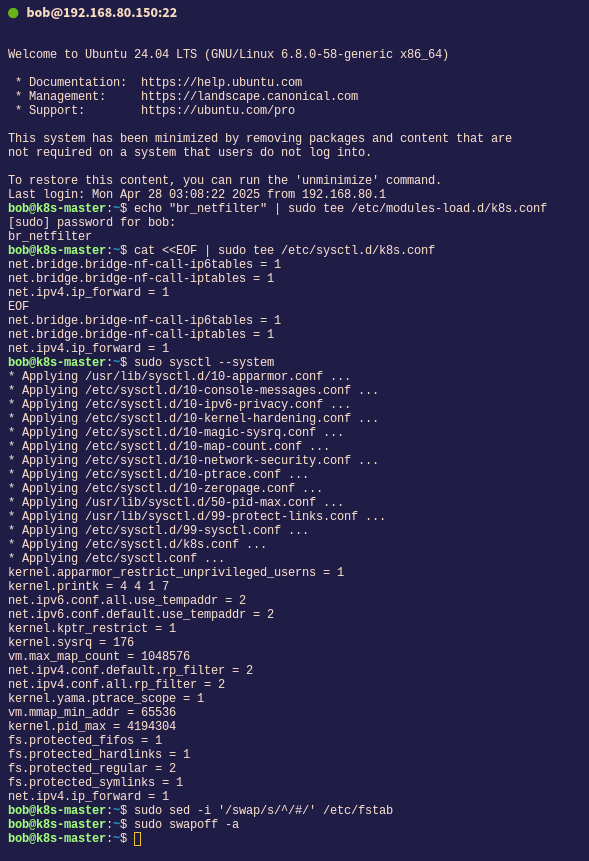

- Enable network packet forwarding; otherwise some CNI plugins will not work properly

      # Load the br_netfilter kernel module now and on every boot
      sudo modprobe br_netfilter
      echo "br_netfilter" | sudo tee /etc/modules-load.d/k8s.conf
      # Configure the sysctl kernel parameters
      cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
      net.bridge.bridge-nf-call-ip6tables = 1
      net.bridge.bridge-nf-call-iptables = 1
      net.ipv4.ip_forward = 1
      EOF
      # Apply the sysctl configuration
      sudo sysctl --system

- Disable swap; otherwise kubelet will not work properly

      sudo sed -i '/swap/s/^/#/' /etc/fstab
      sudo swapoff -a

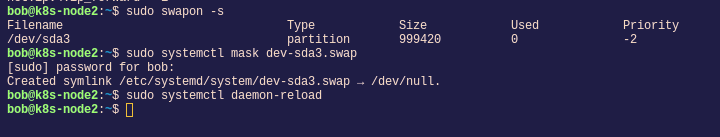

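In my testing on Debian 12, commenting out the swap entry in fstab did not keep swap disabled after a reboot, so an extra step is needed

    # List the currently active swap devices
    sudo swapon -s
    # Mask the swap unit through systemd
    sudo systemctl mask dev-sda3.swap   # replace with your swap device name
    sudo systemctl daemon-reload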

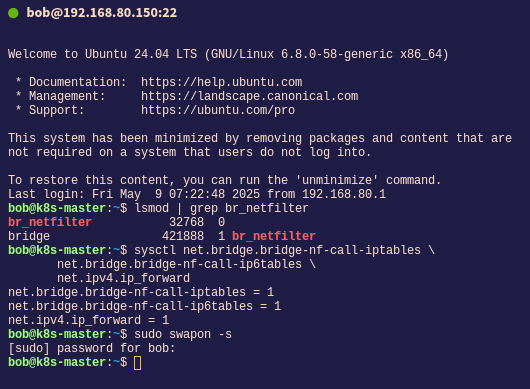

- Verify that the configuration has taken effect

      # Check that the br_netfilter module is loaded
      lsmod | grep br_netfilter
      # Check the sysctl parameter values
      sudo sysctl net.bridge.bridge-nf-call-iptables \
        net.bridge.bridge-nf-call-ip6tables \
        net.ipv4.ip_forward
      # Expected output:
      net.bridge.bridge-nf-call-iptables = 1
      net.bridge.bridge-nf-call-ip6tables = 1
      net.ipv4.ip_forward = 1
      # Check for active swap
      sudo swapon -s   # should normally print nothing
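If you would rather have a script make that check than compare values by eye, a small filter along these lines could do it. This is only a sketch: `all_enabled` is a hypothetical helper that reads text in the `key = value` format that sysctl prints, and the demo feeds it sample text rather than querying the kernel.

```shell
# Read "key = value" lines (sysctl output format) on stdin.
# Succeeds only if at least one line was read and every value is 1.
all_enabled() {
  awk -F' *= *' '
    $2 != 1 { bad = 1; print "not enabled: " $1 }
    END     { exit (bad || NR == 0) }
  '
}

# Demo on sample text that mimics the expected output above.
printf '%s\n' \
  'net.bridge.bridge-nf-call-iptables = 1' \
  'net.bridge.bridge-nf-call-ip6tables = 1' \
  'net.ipv4.ip_forward = 1' | all_enabled && echo "all parameters enabled"
```

On a real node the input would come from `sudo sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward | all_enabled`.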

# Deploying containerd

containerd is the container runtime generally recommended for k8s today. You can also use Docker, but that is outside the scope of this article.

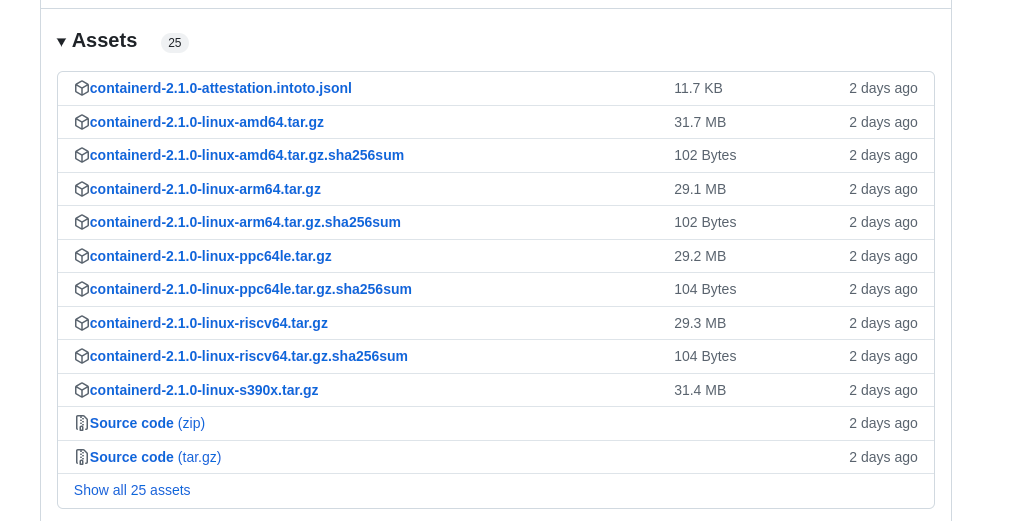

- Install containerd

  Download the latest containerd binary release from https://github.com/containerd/containerd/releases

- Extract the archive to /usr/local

      sudo tar Cxzvf /usr/local containerd-2.1.0-rc.1-linux-amd64.tar.gz
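If you prefer to fetch the archive from the command line, a sketch like this builds the download URL. It assumes the asset naming that the containerd release page currently uses (`containerd-<version>-linux-<arch>.tar.gz` under the tag `v<version>`); `containerd_url` is a hypothetical helper, so check the release page if the layout ever changes.

```shell
# Build the GitHub release URL for a containerd archive.
# Assumption: assets are named containerd-<version>-linux-<arch>.tar.gz under tag v<version>.
containerd_url() {
  version="$1"
  arch="${2:-amd64}"
  printf 'https://github.com/containerd/containerd/releases/download/v%s/containerd-%s-linux-%s.tar.gz\n' \
    "$version" "$version" "$arch"
}

# Print the URL for the version used in this article.
containerd_url 2.1.0-rc.1
```

The archive could then be downloaded with `curl -fLO "$(containerd_url 2.1.0-rc.1)"`.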

- Create the systemd service

      # Create the systemd unit directory
      sudo mkdir -p /usr/local/lib/systemd/system
      # Create the containerd service
      cat << EOF | sudo tee /usr/local/lib/systemd/system/containerd.service
      [Unit]
      Description=containerd container runtime
      Documentation=https://containerd.io
      After=network.target dbus.service

      [Service]
      ExecStartPre=-/sbin/modprobe overlay
      ExecStart=/usr/local/bin/containerd
      Type=notify
      Delegate=yes
      KillMode=process
      Restart=always
      RestartSec=5
      LimitNPROC=infinity
      LimitCORE=infinity
      TasksMax=infinity
      OOMScoreAdjust=-999

      [Install]
      WantedBy=multi-user.target
      EOF
      # Reload systemd, then enable containerd at boot and start it now
      sudo systemctl daemon-reload
      sudo systemctl enable --now containerd

- Generate the containerd configuration file

      # Create the containerd configuration directory
      sudo mkdir -p /etc/containerd
      # Generate the default containerd configuration
      containerd config default | sudo tee /etc/containerd/config.toml
      # Point the pause image at the local registry; replace "k8s-registry.keeponline.cn/k8s" with your own registry address
      sudo sed -i 's|registry.k8s.io|k8s-registry.keeponline.cn/k8s|g' /etc/containerd/config.toml
      # Restart containerd
      sudo systemctl restart containerd

Besides containerd, we also need runc and the CNI plugins. Both are simple to install, so I won't go into much detail.

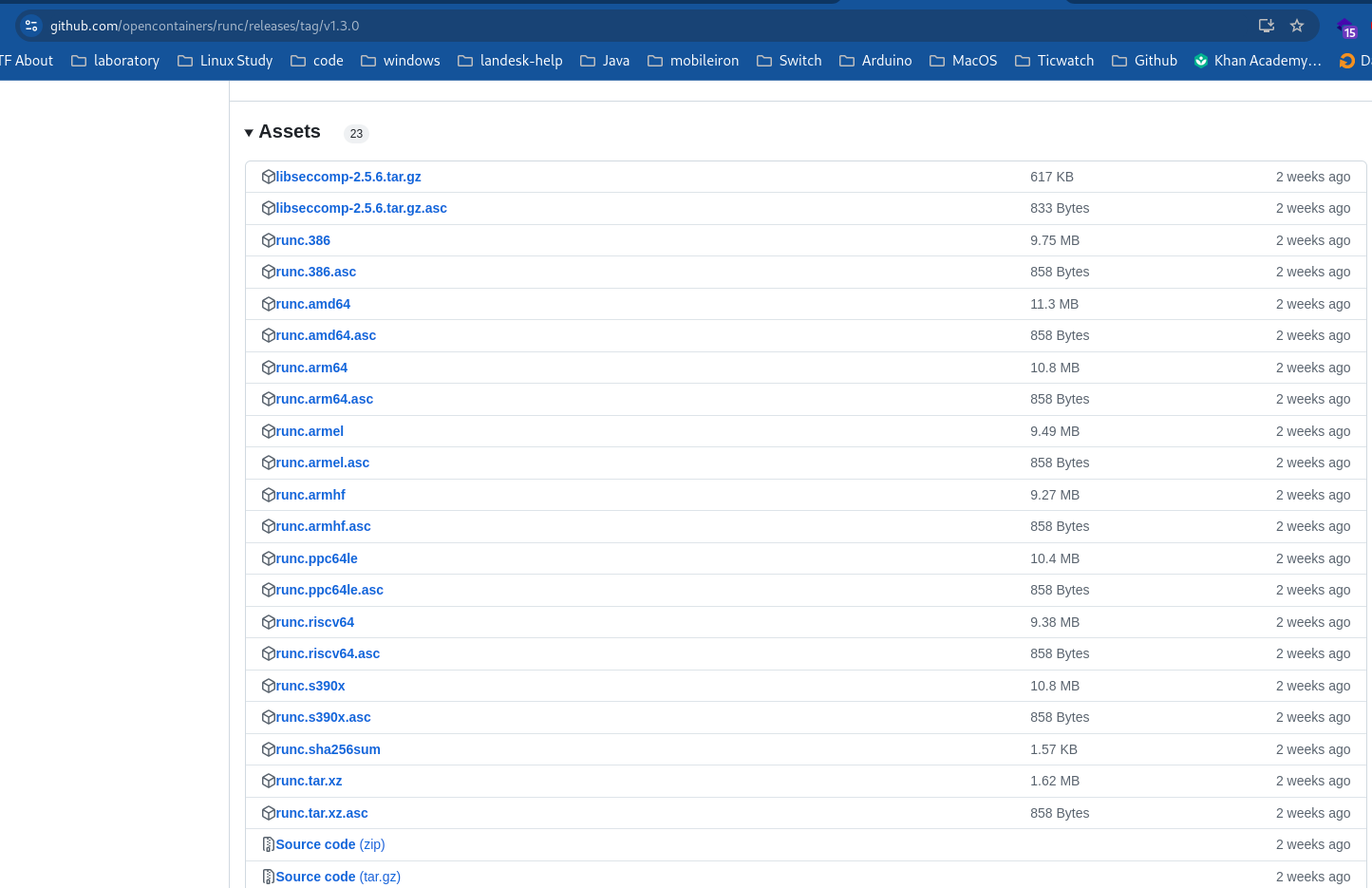

# Deploying runc

- Download runc

  Download the latest binary from https://github.com/opencontainers/runc/releases

- Install runc

      sudo install -m 755 runc.amd64 /usr/local/sbin/runc

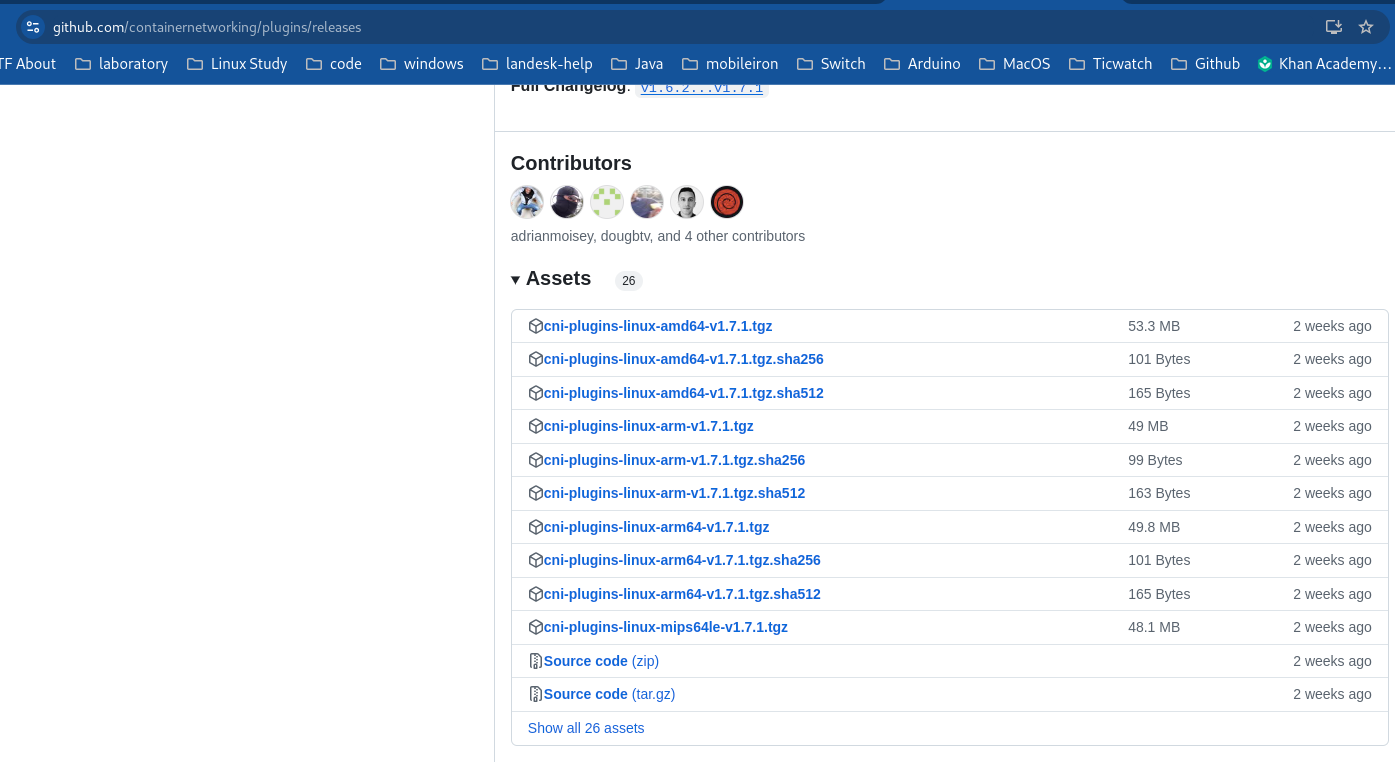

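# Deploying the CNI plugins

- Download the CNI binaries

  Download the archive that matches your system architecture from https://github.com/containernetworking/plugins/releases

- Create the CNI directory

      sudo mkdir -p /opt/cni/bin

- Extract the archive into the CNI directory

      sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.7.1.tgz

That completes the containerd-related setup.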
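Before moving on, it may be worth confirming that everything landed where the steps above put it. The sketch below uses a hypothetical helper, `missing_files`, that only tests whether each path exists and is executable; it does not check versions or whether containerd is running.

```shell
# Report any path that is missing or not executable; return non-zero if any is.
missing_files() {
  rc=0
  for f in "$@"; do
    [ -x "$f" ] || { echo "missing or not executable: $f"; rc=1; }
  done
  return "$rc"
}

# Paths follow the install locations used above; bridge and loopback are two of
# the plugins shipped in the CNI plugins archive.
missing_files /usr/local/bin/containerd /usr/local/sbin/runc \
  /opt/cni/bin/bridge /opt/cni/bin/loopback || echo "fix the items listed above first"
```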

# Deploying kubeadm

There are so many ways to deploy k8s that it made my head spin. In the end I went with kubeadm, the tool recommended by the official documentation.

Installation on Ubuntu/Debian:

    # 1. Update the apt package index and install the packages needed to use the Kubernetes apt repository
    sudo apt update
    sudo apt-get install -y apt-transport-https ca-certificates curl gpg
    # 2. Download the public signing key for the Kubernetes package repositories.
    #    On releases older than Debian 12 and Ubuntu 22.04, /etc/apt/keyrings does not exist by default
    #    and should be created before running curl.
    # sudo mkdir -p -m 755 /etc/apt/keyrings
    curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.33/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
    # 3. Add the Kubernetes apt repository
    echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.33/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list
    # 4. Update the apt package index, install kubelet, kubeadm and kubectl, and pin their versions
    sudo apt update
    sudo apt install -y kubelet kubeadm kubectl
    sudo apt-mark hold kubelet kubeadm kubectl

Installation on CentOS/RedHat:

    # 1. Set SELinux to permissive mode
    sudo setenforce 0
    sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
    # 2. Add the Kubernetes yum repository
    cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://pkgs.k8s.io/core:/stable:/v1.33/rpm/
    enabled=1
    gpgcheck=1
    gpgkey=https://pkgs.k8s.io/core:/stable:/v1.33/rpm/repodata/repomd.xml.key
    exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
    EOF
    # 3. Install kubelet, kubeadm and kubectl, and enable kubelet at boot
    sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes
    sudo systemctl enable --now kubelet

That completes the installation of the k8s deployment tools.
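Both repository definitions above point at the v1.33 package stream, because pkgs.k8s.io publishes a separate repository for each Kubernetes minor version. As a small sketch, the hypothetical helper `k8s_repo_url` below derives that URL from a full three-part version string, which can help when you later target a different release.

```shell
# Derive the pkgs.k8s.io repository URL from a Kubernetes version.
# $1: three-part version such as v1.33.0 or 1.33.0; $2: package type, deb or rpm.
k8s_repo_url() {
  version="${1#v}"        # drop an optional leading "v"
  minor="${version%.*}"   # 1.33.0 -> 1.33
  printf 'https://pkgs.k8s.io/core:/stable:/v%s/%s/\n' "$minor" "$2"
}

k8s_repo_url v1.33.0 deb
k8s_repo_url 1.33.0 rpm
```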

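# Local image registry

To make the cluster deployment go smoothly, we first install docker-ce and the Harbor registry on the k8s-registry server.

For well-known reasons, the k8s image registry is not reliably reachable from networks in mainland China. We therefore pull the images k8s needs from a third-party mirror and push them to the local registry with docker, so that the cluster nodes can pull them from the local registry.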

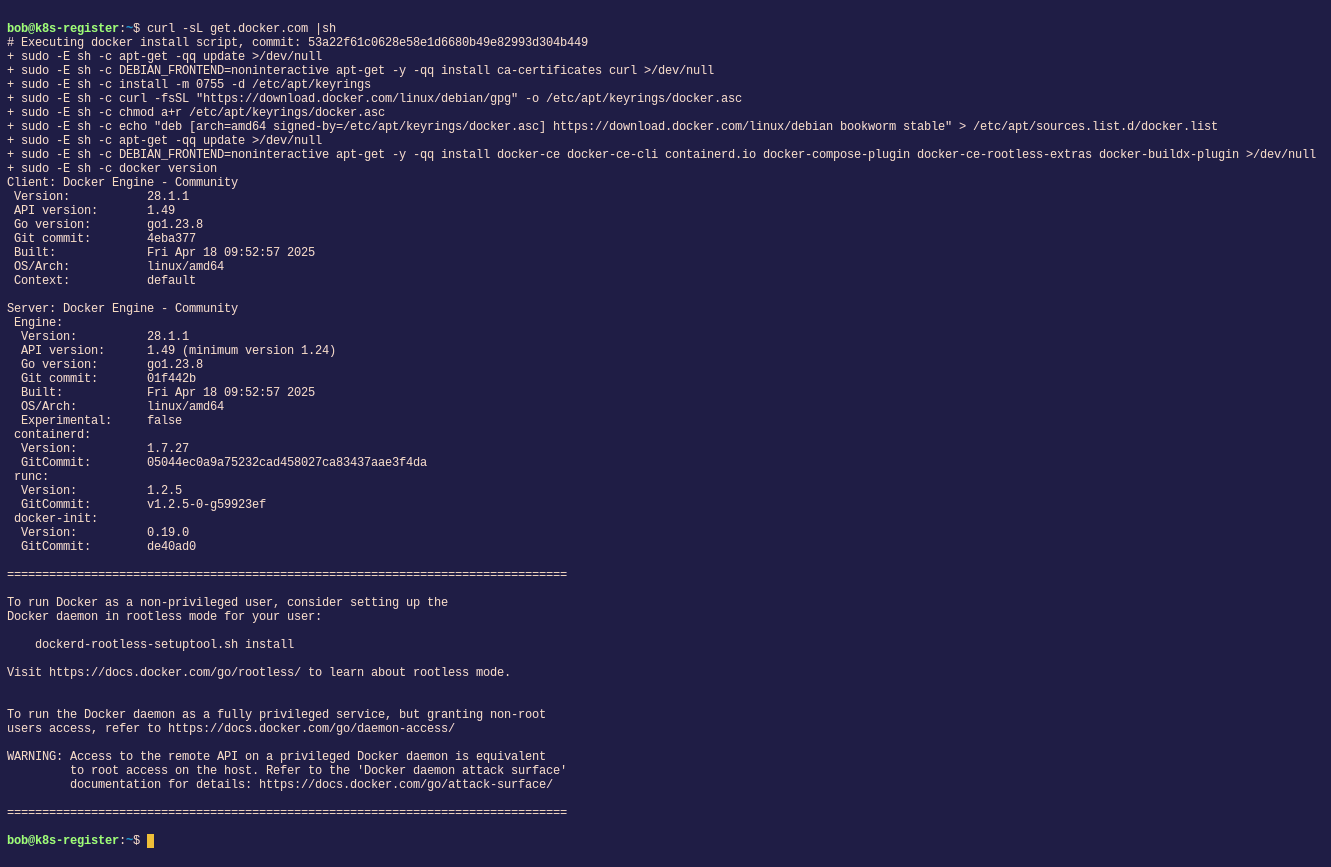

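# Deploying docker

docker-ce can be installed the same way on every distribution with the official script

    curl -fsSL https://get.docker.com | sh

On Ubuntu and Debian the docker service is started and enabled at boot automatically after installation. On CentOS you need to do that by hand

    sudo systemctl start docker && sudo systemctl enable docker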

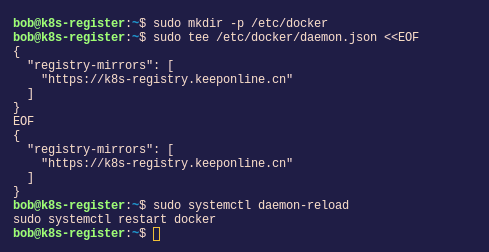

Once docker is installed, we can optionally point its registry mirror at our own local registry

    # Create the docker configuration directory
    sudo mkdir -p /etc/docker
    # Configure the docker registry mirror
    sudo tee /etc/docker/daemon.json <<EOF
    {
      "registry-mirrors": [
        "https://k8s-registry.keeponline.cn"
      ]
    }
    EOF
    # Reload and restart docker
    sudo systemctl daemon-reload
    sudo systemctl restart docker

If you do not plan to give the registry an SSL certificate, configure insecure-registries instead to allow access over plain http

    # Create the docker configuration directory
    sudo mkdir -p /etc/docker
    # Allow the local registry over http
    sudo tee /etc/docker/daemon.json <<EOF
    {
      "insecure-registries": ["192.168.80.153", "k8s-registry.keeponline.cn"]
    }
    EOF
    # Reload and restart docker
    sudo systemctl daemon-reload
    sudo systemctl restart docker
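Note that each `tee` call writes the whole file, so running the second snippet replaces whatever the first one wrote. If you want the mirror setting and the insecure-registries setting at the same time, they have to live in one JSON object. A sketch, with `render_daemon_json` as a made-up helper name and an `http://` mirror URL assumed for the no-certificate case:

```shell
# Print a daemon.json that carries both settings in a single JSON object.
# Assumption: the registry has no certificate, so the mirror URL uses http.
render_daemon_json() {
  cat <<'EOF'
{
  "registry-mirrors": ["http://k8s-registry.keeponline.cn"],
  "insecure-registries": ["192.168.80.153", "k8s-registry.keeponline.cn"]
}
EOF
}

# Preview only; nothing is written to /etc/docker here.
render_daemon_json
```

Once the output looks right, it could be written with `render_daemon_json | sudo tee /etc/docker/daemon.json`, followed by a docker restart.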

By default a regular user has no permission to run docker commands. For convenience, we can add the user to the docker group

    # Add user bob to the docker group
    sudo usermod -aG docker bob
    # Start a new shell with docker as the current group so the change applies without logging out
    newgrp docker
    # Show the current user's identity and confirm it includes the docker group
    id
    # Expected output:
    uid=1000(bob) gid=996(docker) groups=996(docker),24(cdrom),25(floppy),29(audio),30(dip),44(video),46(plugdev),100(users),106(netdev),1000(bob)
    # Check the docker version. If the permission is in effect, the "Server: Docker Engine" section is shown;
    # if only the Client section appears, the permission has not taken effect
    docker version
    # Expected output:
    Client: Docker Engine - Community
     Version:           28.1.1
     API version:       1.49
     Go version:        go1.23.8
     Git commit:        4eba377
     Built:             Fri Apr 18 09:52:57 2025
     OS/Arch:           linux/amd64
     Context:           default

    Server: Docker Engine - Community
     Engine:
      Version:          28.1.1
      API version:      1.49 (minimum version 1.24)
      Go version:       go1.23.8
      Git commit:       01f442b
      Built:            Fri Apr 18 09:52:57 2025
      OS/Arch:          linux/amd64
      Experimental:     false
     containerd:
      Version:          1.7.27
      GitCommit:        05044ec0a9a75232cad458027ca83437aae3f4da
     runc:
      Version:          1.2.5
      GitCommit:        v1.2.5-0-g59923ef
     docker-init:
      Version:          0.19.0
      GitCommit:        de40ad0

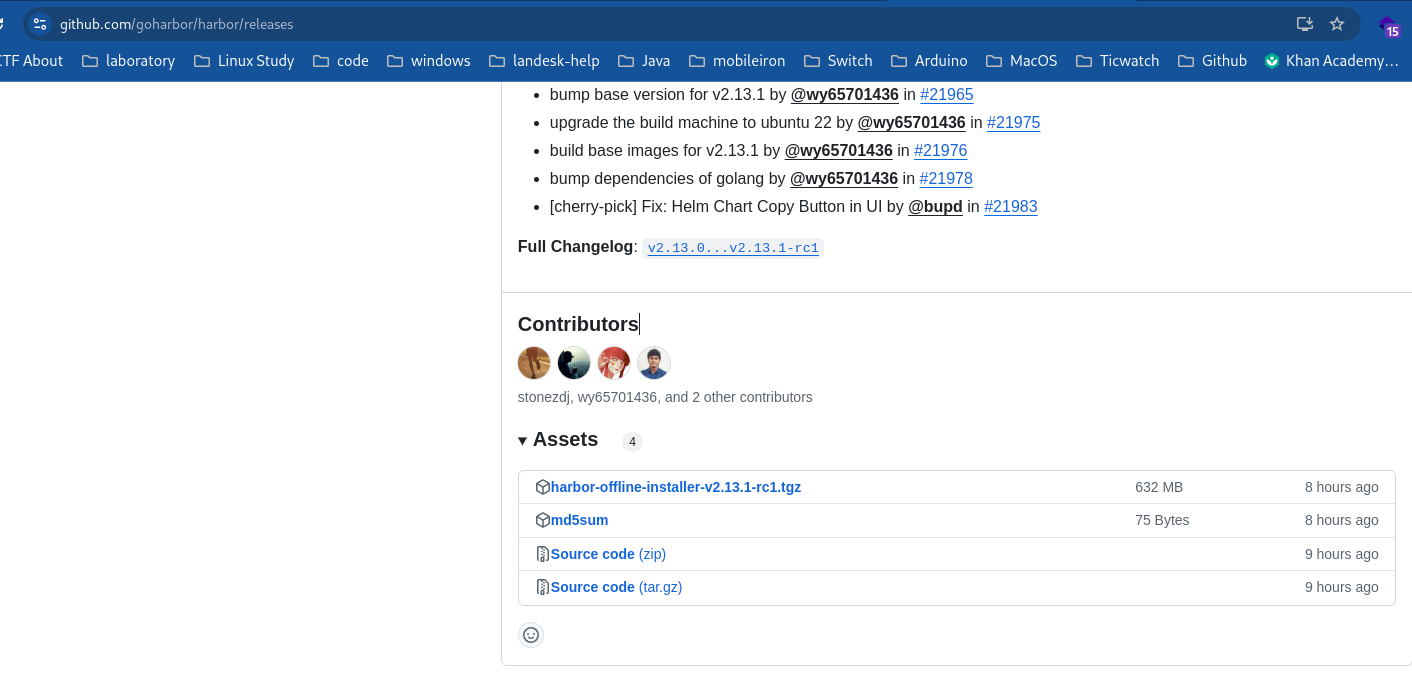

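# Deploying harbor

- Download the harbor offline installer

  Open https://github.com/goharbor/harbor/releases in a browser and download the latest harbor offline installer

- Extract the archive

      tar zxvf harbor-offline-installer-v2.13.1-rc1.tgz

- Create the configuration file

  Change into the harbor root directory and make a copy of the harbor.yml template

      cd harbor && cp harbor.yml.tmpl harbor.yml

- Edit harbor.yml. Change the lines marked with comments below; everything without a comment can stay at its default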

      hostname: k8s-registry.keeponline.cn   # registry hostname
      http:
        port: 80
      https:   # comment out the whole https section if you have no SSL certificate
        port: 443
        certificate: /home/bob/harbor/cert/keeponline.cn.pem   # certificate path
        private_key: /home/bob/harbor/cert/keeponline.cn.key   # private key path
      harbor_admin_password: Harbor12345   # default login password of the registry admin
      database:
        password: root123   # default database password
        max_idle_conns: 100
        max_open_conns: 900
        conn_max_lifetime: 5m
        conn_max_idle_time: 0
      data_volume: /data
      trivy:
        ignore_unfixed: false
        skip_update: false
        skip_java_db_update: false
        offline_scan: false
        security_check: vuln
        insecure: false
        timeout: 5m0s
      jobservice:
        max_job_workers: 10
        max_job_duration_hours: 24
        job_loggers:
          - STD_OUTPUT
          - FILE
      notification:
        webhook_job_max_retry: 3
      log:
        level: info
        local:
          rotate_count: 50
          rotate_size: 200M
          location: /var/log/harbor
      _version: 2.13.0
      proxy:
        http_proxy:
        https_proxy:
        no_proxy:
        components:
          - core
          - jobservice
          - trivy
      upload_purging:
        enabled: true
        age: 168h
        interval: 24h
        dryrun: false
      cache:
        enabled: false
        expire_hours: 24

- Install harbor

  Once the configuration is done, run the install.sh script in the same directory (root privileges required)

      sudo ./install.sh
      # Expected output:
      [Step 5]: starting Harbor ...
      [+] Running 10/10
       ✔ Network harbor_harbor        Created    0.0s
       ✔ Container harbor-log         Started    0.3s
       ✔ Container harbor-portal      Started    1.3s
       ✔ Container harbor-db          Started    1.2s
       ✔ Container registry           Started    0.7s
       ✔ Container redis              Started    1.5s
       ✔ Container registryctl        Started    0.8s
       ✔ Container harbor-core        Started    2.0s
       ✔ Container harbor-jobservice  Started    2.3s
       ✔ Container nginx              Started    2.5s
      ✔ ----Harbor has been installed and started successfully.----

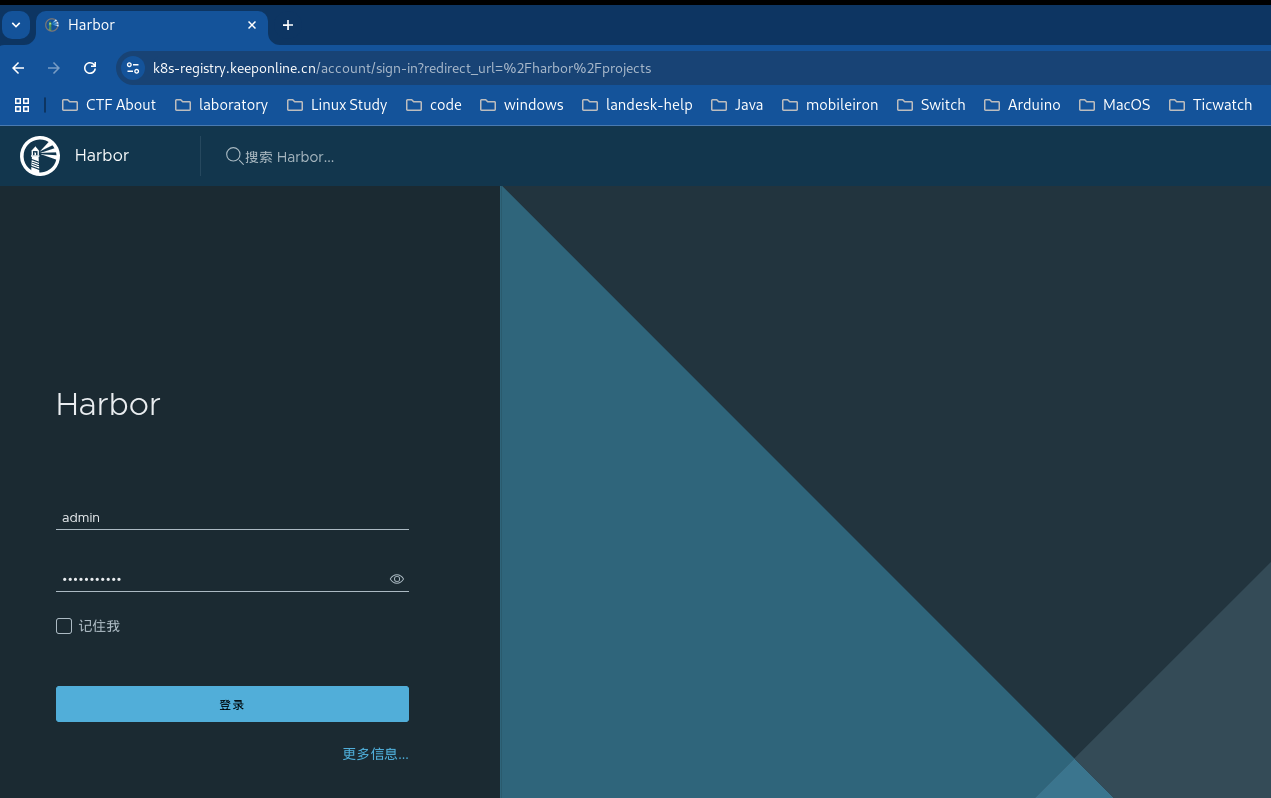

- 验证 harbor 是否能够正常访问

通过浏览器访问仓库地址

测试访问 https 正常,证书正常

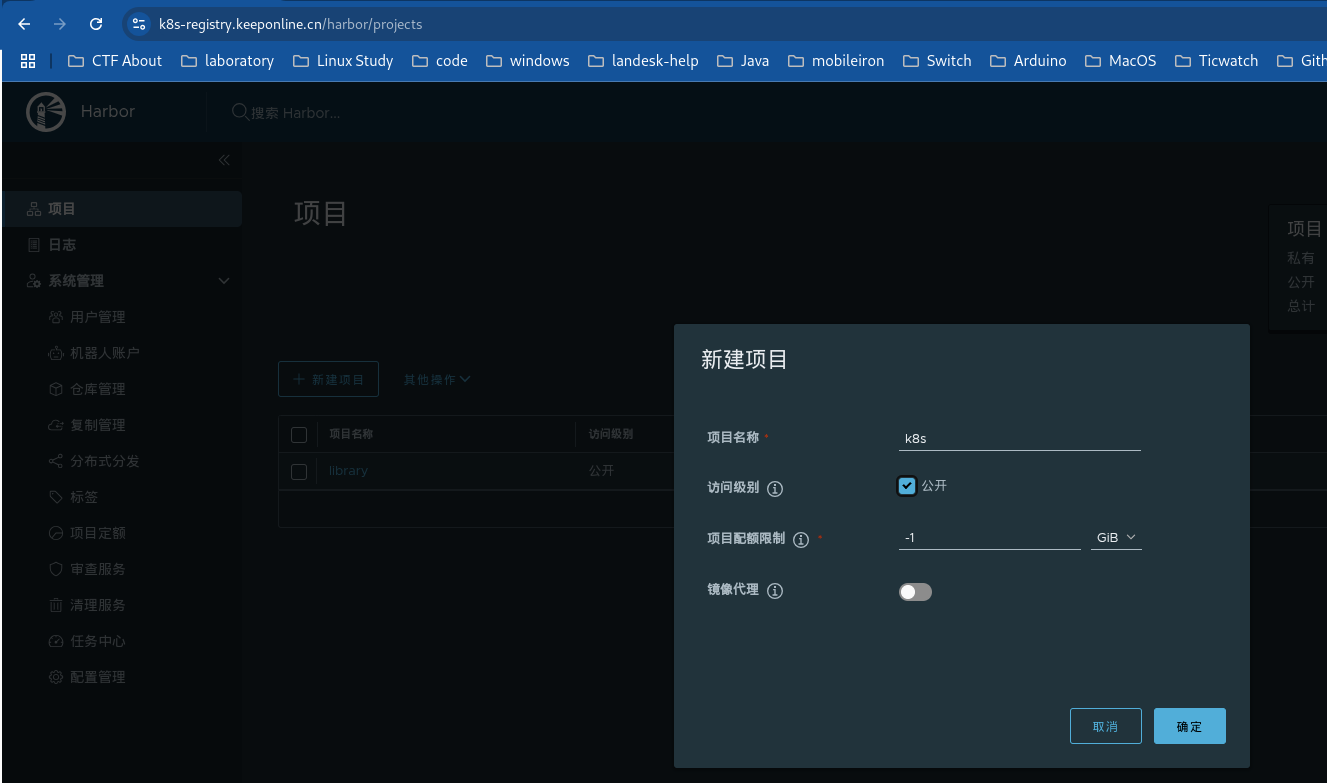

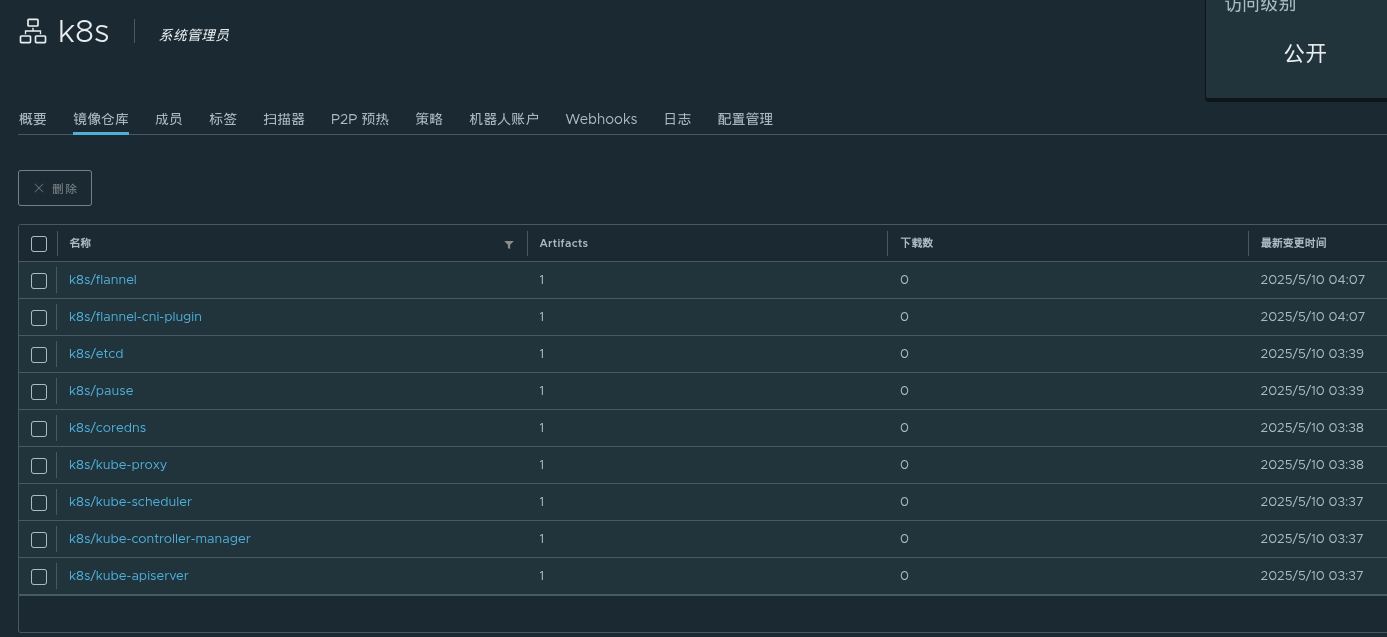

- 创建 k8s 项目

登陆 harbor ,在 项目 视图中点击 新建项目,创建一个 公开 的 k8s 项目

后续通过 k8s-registry.keeponline.cn/k8s 这个地址便可以推送和拉取该项目的镜像文件

- Create a systemd service (optional)

  A systemd service makes it easier to start and manage harbor later on

      sudo vi /etc/systemd/system/harbor.service

      [Unit]
      Description=Harbor Container Registry
      After=docker.service network.target
      Requires=docker.service

      [Service]
      Type=oneshot
      RemainAfterExit=yes
      # Path of your harbor directory
      WorkingDirectory=/home/bob/harbor
      # docker-compose.yml is generated in the harbor root directory by default; adjust both paths to yours
      ExecStart=/usr/bin/docker compose -f /home/bob/harbor/docker-compose.yml up -d
      ExecStop=/usr/bin/docker compose -f /home/bob/harbor/docker-compose.yml down
      TimeoutStartSec=0

      [Install]
      WantedBy=multi-user.target

  Note that systemd does not support trailing comments on a setting line, so the comments are kept on lines of their own.

  Enable harbor at boot

      sudo systemctl enable harbor

# Pulling and pushing the k8s images

To pull the k8s images we rely on a third-party mirror site.

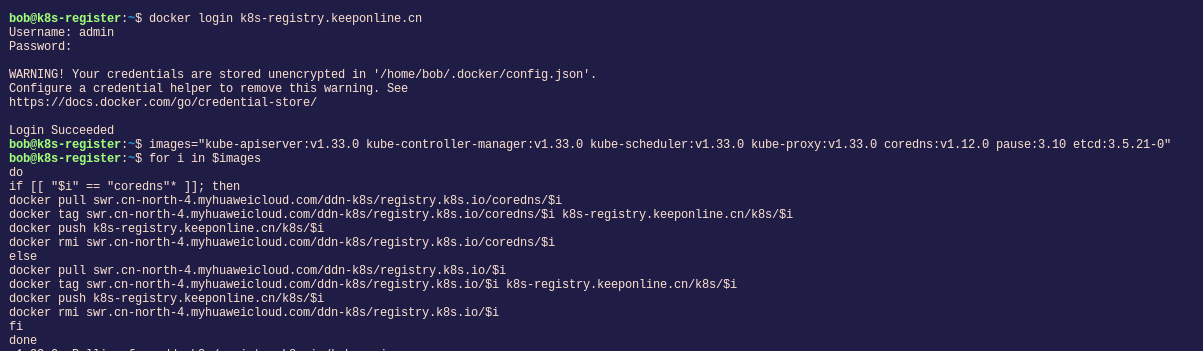

- Log docker in to the local harbor registry

      bob@k8s-register:~$ docker login k8s-registry.keeponline.cn
      Username: admin
      Password:
      WARNING! Your credentials are stored unencrypted in '/home/bob/.docker/config.json'.
      Configure a credential helper to remove this warning. See
      https://docs.docker.com/go/credential-store/
      Login Succeeded

- Pull and upload the k8s images

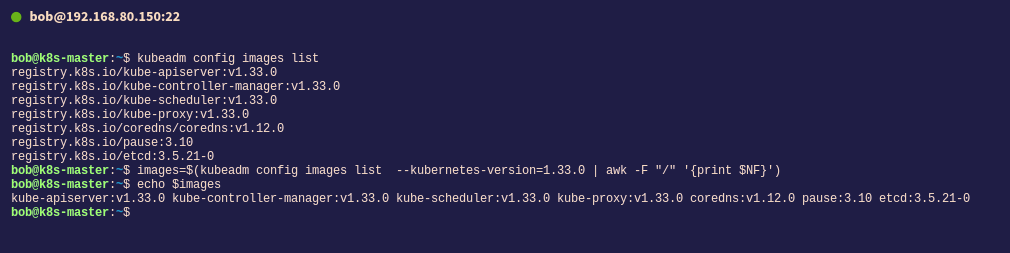

On a server that already has kubeadm installed, run `kubeadm config images list` to get the images and versions required by the current k8s release.

With that list in hand, we can pull and push the images with docker on the 192.168.80.153 server

    # Images to mirror
    images="kube-apiserver:v1.33.0 kube-controller-manager:v1.33.0 kube-scheduler:v1.33.0 kube-proxy:v1.33.0 coredns:v1.12.0 pause:3.10 etcd:3.5.21-0"
    # Pull each image and push it to the local registry; replace "k8s-registry.keeponline.cn/k8s" with your own project address
    for i in $images
    do
      if [[ "$i" == "coredns"* ]]; then
        # coredns sits one level deeper upstream (registry.k8s.io/coredns/coredns)
        docker pull swr.cn-north-4.myhuaweicloud.com/ddn-k8s/registry.k8s.io/coredns/$i
        docker tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/registry.k8s.io/coredns/$i k8s-registry.keeponline.cn/k8s/$i
        docker push k8s-registry.keeponline.cn/k8s/$i
        docker rmi swr.cn-north-4.myhuaweicloud.com/ddn-k8s/registry.k8s.io/coredns/$i
      else
        docker pull swr.cn-north-4.myhuaweicloud.com/ddn-k8s/registry.k8s.io/$i
        docker tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/registry.k8s.io/$i k8s-registry.keeponline.cn/k8s/$i
        docker push k8s-registry.keeponline.cn/k8s/$i
        docker rmi swr.cn-north-4.myhuaweicloud.com/ddn-k8s/registry.k8s.io/$i
      fi
    done
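The coredns branch in the loop exists because `kubeadm config images list` reports that image as registry.k8s.io/coredns/coredns:v1.12.0, one path level deeper than the others, while the local project stores every image directly under k8s/. A sketch of that naming rule follows; `local_name` and `sync_commands` are hypothetical helpers, and the second one only prints the docker commands instead of running them.

```shell
MIRROR="swr.cn-north-4.myhuaweicloud.com/ddn-k8s"   # third-party mirror used above
LOCAL="k8s-registry.keeponline.cn/k8s"              # local Harbor project

# Map an upstream image reference to its name in the local project.
# Only the last path component is kept, so registry.k8s.io/coredns/coredns:v1.12.0
# becomes $LOCAL/coredns:v1.12.0, matching what the loop above pushes.
local_name() {
  printf '%s/%s\n' "$LOCAL" "${1##*/}"
}

# Print (do not run) the docker commands for one upstream image.
sync_commands() {
  printf 'docker pull %s/%s\n' "$MIRROR" "$1"
  printf 'docker tag %s/%s %s\n' "$MIRROR" "$1" "$(local_name "$1")"
  printf 'docker push %s\n' "$(local_name "$1")"
}

sync_commands registry.k8s.io/coredns/coredns:v1.12.0
```

Fed with the real list, for example `kubeadm config images list | while read -r img; do sync_commands "$img"; done`, this prints one pull/tag/push triple per image, which you can review before executing.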

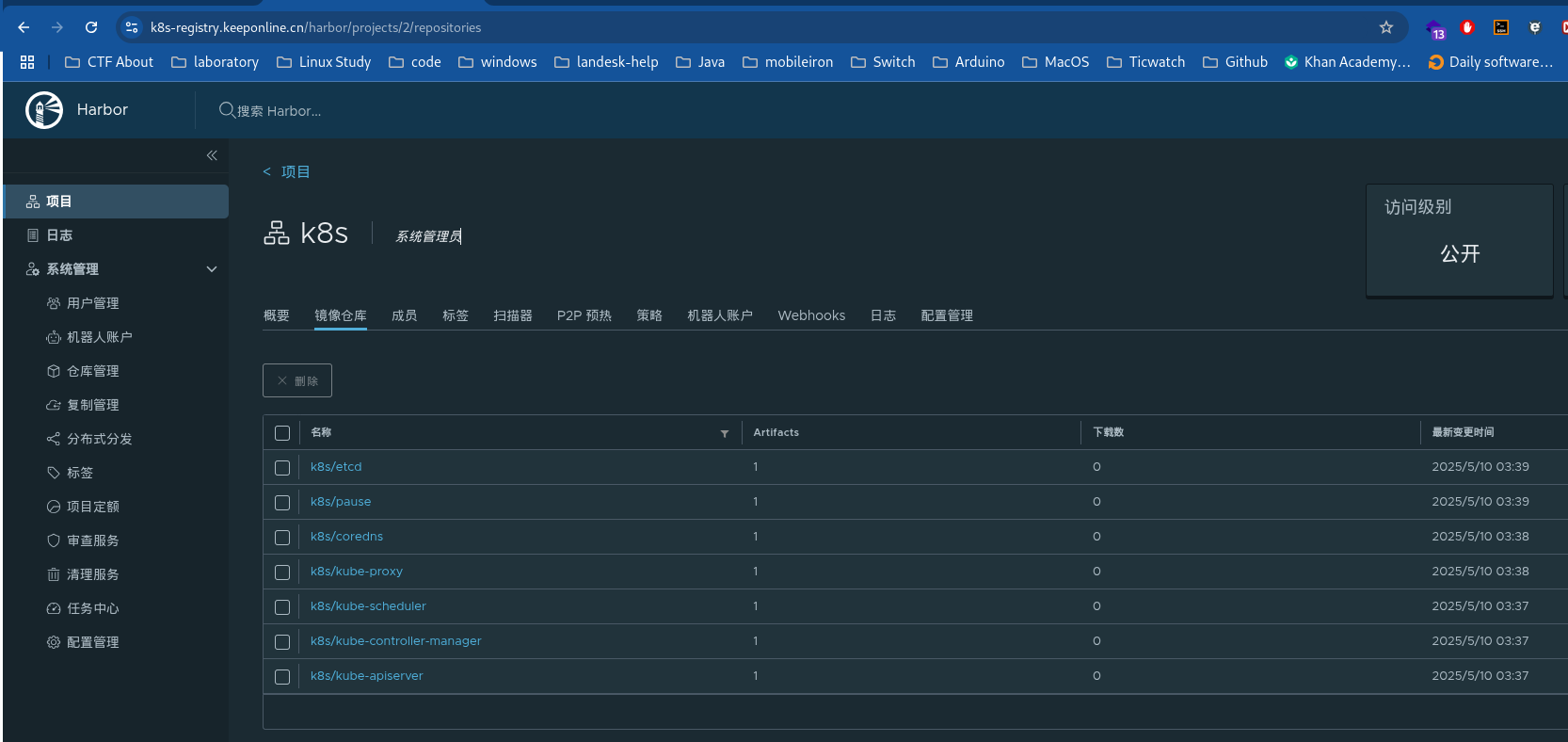

- When the commands finish, log in to harbor and check that the images were pushed successfully

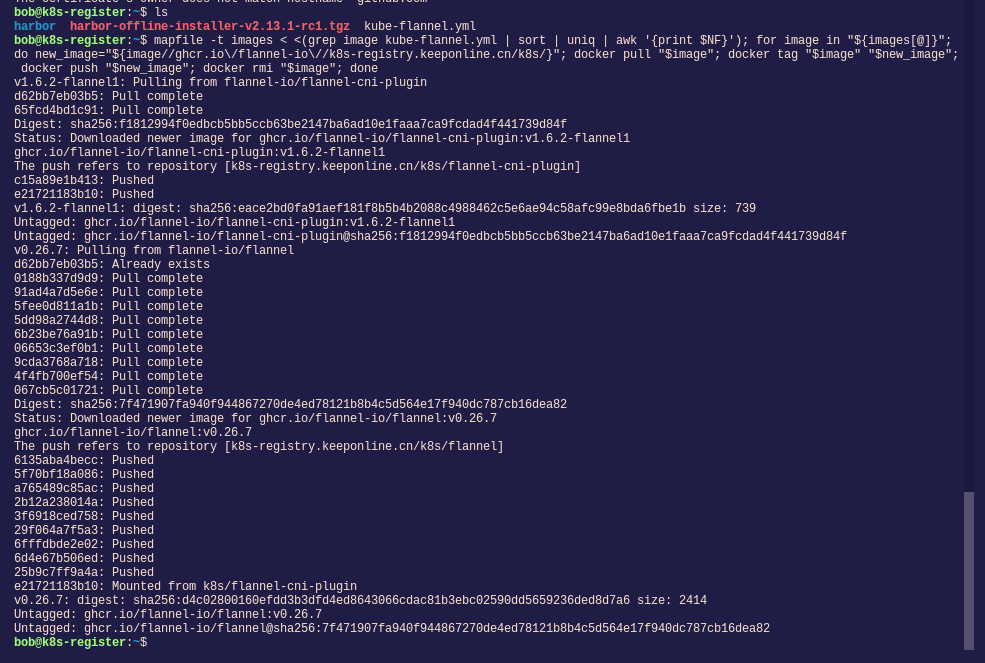

- Pull the flannel images

  I chose flannel as the pod network add-on for this k8s environment. If you use a different network add-on, skip this step

  flannel project page: https://github.com/flannel-io/flannel#deploying-flannel-manually

      # Download the flannel manifest
      wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
      # Pull the flannel images and push them to the local registry
      mapfile -t images < <(grep 'image:' kube-flannel.yml | awk '{print $NF}' | sort -u)
      for image in "${images[@]}"; do
        new_image="${image//ghcr.io\/flannel-io\//k8s-registry.keeponline.cn/k8s/}"
        docker pull "$image"
        docker tag "$image" "$new_image"
        docker push "$new_image"
        docker rmi "$image"
      done

All the images the k8s cluster needs are now in place.

# k8s cluster deployment

With all the preparation done, we can finally get on with deploying the k8s cluster ^_^

# Deploying the master node

The k8s-master server is the control plane node of this k8s cluster.

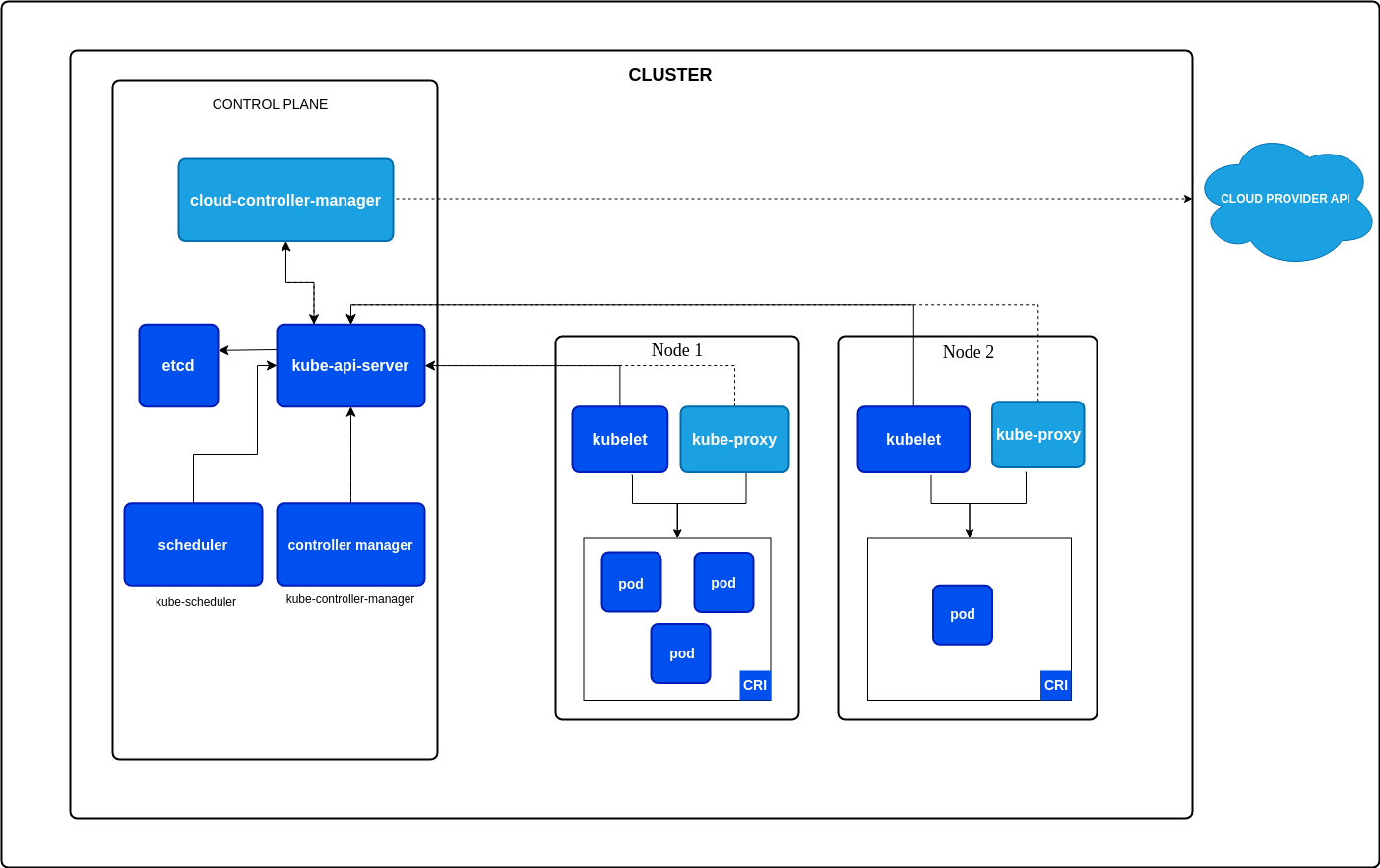

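Its main roles are:

- Initialize the cluster and generate its globally unique configuration (certificates, kubeconfig files, and so on)
- Deploy the control plane components (kube-apiserver, kube-scheduler, kube-controller-manager, etcd)
- Generate the join token and command that other nodes use to join the cluster

# Initializing the cluster

- Create an initial configuration file with kubeadm

      kubeadm config print init-defaults > kubeadm-init.yaml

- Edit kubeadm-init.yaml and change the values marked with comments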

      apiVersion: kubeadm.k8s.io/v1beta4
      bootstrapTokens:
      - groups:
        - system:bootstrappers:kubeadm:default-node-token
        token: abcdef.0123456789abcdef
        ttl: 24h0m0s
        usages:
        - signing
        - authentication
      kind: InitConfiguration
      localAPIEndpoint:
        advertiseAddress: 192.168.80.150   # API server address; use the master's IP
        bindPort: 6443
      nodeRegistration:
        criSocket: unix:///var/run/containerd/containerd.sock
        imagePullPolicy: IfNotPresent
        imagePullSerial: true
        name: k8s-master   # hostname of the master
        taints: null
      timeouts:
        controlPlaneComponentHealthCheck: 4m0s
        discovery: 5m0s
        etcdAPICall: 2m0s
        kubeletHealthCheck: 4m0s
        kubernetesAPICall: 1m0s
        tlsBootstrap: 5m0s
        upgradeManifests: 5m0s
      ---
      apiServer: {}
      apiVersion: kubeadm.k8s.io/v1beta4
      caCertificateValidityPeriod: 87600h0m0s
      certificateValidityPeriod: 8760h0m0s
      certificatesDir: /etc/kubernetes/pki
      clusterName: kubernetes
      controllerManager: {}
      dns: {}
      encryptionAlgorithm: RSA-2048
      etcd:
        local:
          dataDir: /var/lib/etcd
      imageRepository: k8s-registry.keeponline.cn/k8s   # registry that k8s pulls its images from
      kind: ClusterConfiguration
      kubernetesVersion: 1.33.0   # k8s version
      networking:
        dnsDomain: cluster.local      # cluster DNS domain
        serviceSubnet: 10.96.0.0/12   # service network
        podSubnet: 10.244.0.0/16      # pod network
      proxy: {}
      scheduler: {}

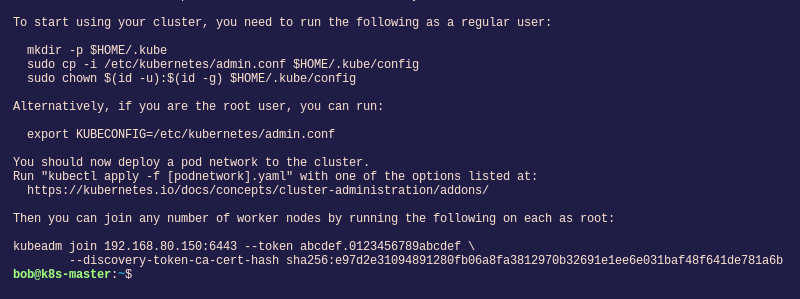

- Initialize the cluster

      sudo kubeadm init --config kubeadm-init.yaml --v=9

Output like the following means the cluster was initialized successfully.

As the output says, before using the cluster you need to run the commands below to copy the k8s admin configuration into your home directory; otherwise kubectl cannot talk to the cluster

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

It also tells us to deploy a pod network to the cluster

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
    https://kubernetes.io/docs/concepts/cluster-administration/addons/

Finally, it prints the command that worker nodes use to join the cluster

    kubeadm join 192.168.80.150:6443 --token abcdef.0123456789abcdef \
      --discovery-token-ca-cert-hash sha256:e97d2e31094891280fb06a8fa3812970b32691e1ee6e031baf48f641de781a6b

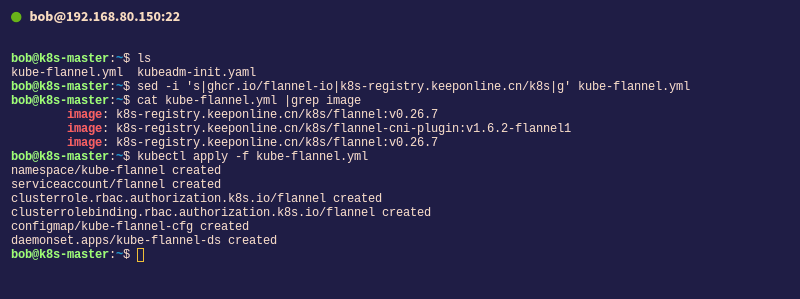
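The join command embeds the bootstrap token from kubeadm-init.yaml, and that token stops working once its ttl (24h0m0s in the file above) has passed. In that case `kubeadm token create --print-join-command` on the master prints a fresh join command. When copying tokens around by hand, a quick format check can catch truncation; the sketch below uses a hypothetical helper, `valid_token`, and only checks the shape of the string, not whether the cluster still accepts it.

```shell
# Check that a string has the bootstrap token shape: 6 characters, a dot,
# then 16 characters, all lowercase letters or digits.
valid_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

valid_token abcdef.0123456789abcdef && echo "token format ok"
valid_token ABCDEF.0123 || echo "token format invalid"
```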

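# Deploying the pod network

The flannel images the pods need were already pushed to the local registry earlier, so the deployment itself is simple

- Download the flannel manifest

      wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

- Point the images in the manifest at the local registry

      sed -i 's|ghcr.io/flannel-io|k8s-registry.keeponline.cn/k8s|g' kube-flannel.yml

Note that flannel's default network is 10.244.0.0/16. If podSubnet in your kubeadm-init.yaml is a different range, you also need to change Network in kube-flannel.yml so that it matches your podSubnet.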
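One way to compare the two values without opening both files is sketched below. The helper names `pod_subnet` and `flannel_network` are made up, and the second one assumes the manifest carries the range as a JSON line of the form `"Network": "<cidr>"`; the demo runs on inline sample text rather than the real files.

```shell
# Extract podSubnet from a kubeadm configuration read on stdin.
pod_subnet() {
  awk '$1 == "podSubnet:" { print $2; exit }'
}

# Extract the "Network" value from a flannel manifest read on stdin.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' | head -n 1
}

# Demo on inline samples; on the master you would feed the real files instead.
a=$(printf '  podSubnet: 10.244.0.0/16 # pod network\n' | pod_subnet)
b=$(printf '      "Network": "10.244.0.0/16",\n' | flannel_network)
[ "$a" = "$b" ] && echo "subnets match: $a"
```

With the real files the calls would be `pod_subnet < kubeadm-init.yaml` and `flannel_network < kube-flannel.yml`.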

- Deploy flannel

      kubectl apply -f kube-flannel.yml

Expected output:

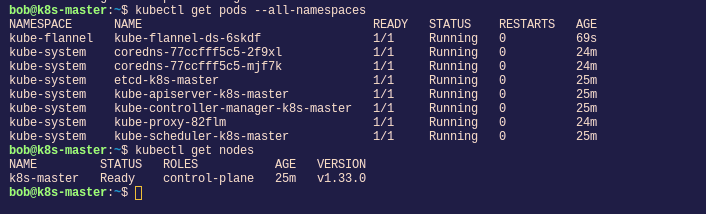

- 验证 pod 和 node 状态是否有异常

- kubectl get pods --all-namespaces # 查看所有的 pod 状态是否 Running

- kubectl get nodes # 查看节点状态是否 Ready, 如果显示 NotReady 表示 pod 网络不正常

预期输出:

到此,master 节点部署完毕

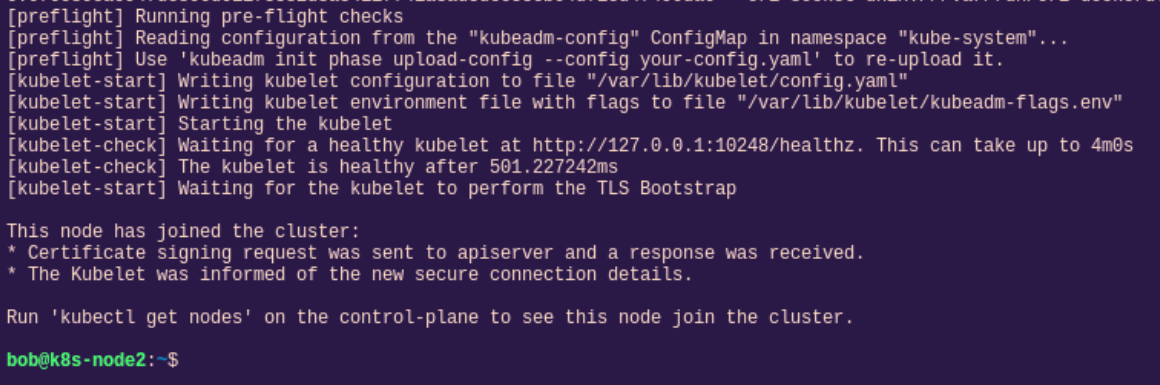

# Joining the cluster

Next, log in to node1 and node2 and join both servers to the cluster

    sudo kubeadm join 192.168.80.150:6443 --token abcdef.0123456789abcdef \
      --discovery-token-ca-cert-hash sha256:e97d2e31094891280fb06a8fa3812970b32691e1ee6e031baf48f641de781a6b

Expected output:

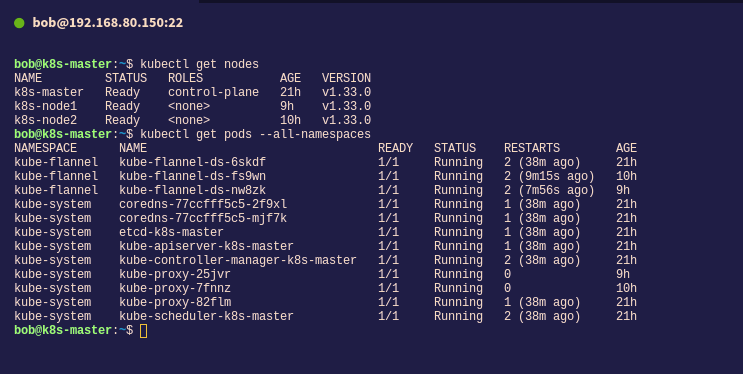

Back on the master node, check that the nodes and pods all report a healthy status. If they do, the whole k8s cluster is deployed.
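To make that check from a script rather than by eye, the output of `kubectl get nodes --no-headers` can be filtered for anything that is not Ready. A minimal sketch, with `not_ready_nodes` as a hypothetical helper and sample text standing in for real kubectl output:

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of nodes whose STATUS column does not start with "Ready".
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Demo on sample text; node names follow this article, the other columns are illustrative.
printf '%s\n' \
  'k8s-master   Ready      control-plane   10m   v1.33.0' \
  'k8s-node1    NotReady   <none>          1m    v1.33.0' | not_ready_nodes
```

On the master the real call would be `kubectl get nodes --no-headers | not_ready_nodes`; empty output means every node is Ready.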

# Troubleshooting

Problems I ran into during the deployment, and how I solved them

- flannel fails to start, and its log shows Failed to check br_netfilter

      bob@k8s-master:~$ kubectl get pods -n kube-flannel
      NAME                    READY   STATUS             RESTARTS      AGE
      kube-flannel-ds-2h8ph   0/1     Init:0/2           0             67s
      kube-flannel-ds-9fxvg   0/1     CrashLoopBackOff   3 (26s ago)   67s
      kube-flannel-ds-r6fdw   0/1     Init:0/2           0             67s
      bob@k8s-master:~$ kubectl logs kube-flannel-ds-9fxvg -n kube-flannel
      Defaulted container "kube-flannel" out of: kube-flannel, install-cni-plugin (init), install-cni (init)
      I0507 13:56:41.039019 1 main.go:211] CLI flags config: {etcdEndpoints:http://127.0.0.1:4001,http://127.0.0.1:2379 etcdPrefix:/coreos.com/network etcdKeyfile: etcdCertfile: etcdCAFile: etcdUsername: etcdPassword: version:false kubeSubnetMgr:true kubeApiUrl: kubeAnnotationPrefix:flannel.alpha.coreos.com kubeConfigFile: iface:[] ifaceRegex:[] ipMasq:true ifaceCanReach: subnetFile:/run/flannel/subnet.env publicIP: publicIPv6: subnetLeaseRenewMargin:60 healthzIP:0.0.0.0 healthzPort:0 iptablesResyncSeconds:5 iptablesForwardRules:true netConfPath:/etc/kube-flannel/net-conf.json setNodeNetworkUnavailable:true}
      W0507 13:56:41.039270 1 client_config.go:618] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.
      I0507 13:56:41.057944 1 kube.go:139] Waiting 10m0s for node controller to sync
      I0507 13:56:41.058022 1 kube.go:469] Starting kube subnet manager
      I0507 13:56:42.058204 1 kube.go:146] Node controller sync successful
      I0507 13:56:42.058273 1 main.go:231] Created subnet manager: Kubernetes Subnet Manager - k8s-master
      I0507 13:56:42.058283 1 main.go:234] Installing signal handlers
      I0507 13:56:42.058680 1 main.go:468] Found network config - Backend type: vxlan
      E0507 13:56:42.058789 1 main.go:268] Failed to check br_netfilter: stat /proc/sys/net/bridge/bridge-nf-call-iptables: no such file or directory

Cause:

- The br_netfilter kernel module is not loaded, so /proc/sys/net/bridge/bridge-nf-call-iptables does not exist and flannel's startup check fails

Solution:

- Load the br_netfilter module and make it persistent, then set net.bridge.bridge-nf-call-iptables and net.ipv4.ip_forward

      # Load the br_netfilter kernel module now and on every boot
      sudo modprobe br_netfilter
      echo "br_netfilter" | sudo tee /etc/modules-load.d/k8s.conf
      # Configure the sysctl kernel parameters
      cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
      net.bridge.bridge-nf-call-ip6tables = 1
      net.bridge.bridge-nf-call-iptables = 1
      net.ipv4.ip_forward = 1
      EOF
      # Apply the sysctl configuration
      sudo sysctl --system

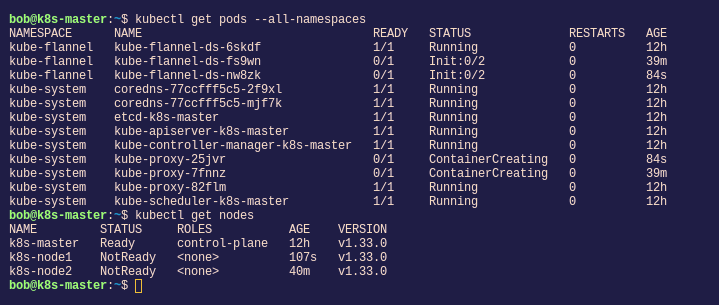

- 节点加入集群后,状态显示

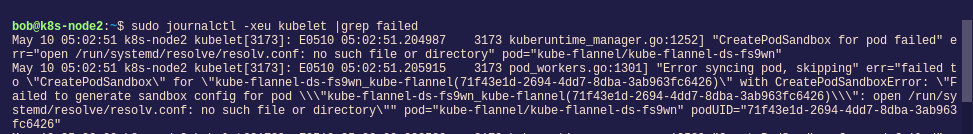

NotReady,相关网络容器无法创建和初始化

在工作节点上执行 sudo journalctl -xeu kubelet |grep failed 查看相关报错信息根据报错进行相对应的处理

# 报错信息 | |

Failed to generate sandbox config for pod ... open /run/systemd/resolve/resolv.conf: no such file or directory |

原因分析:

/run/systemd/resolve/resolv.conf 是由 systemd-resolved 服务生成的 DNS 配置文件路径,这两台工作节点并没有安装 systemd-resolved 服务,所以没有这个配置文件

Solution:

    # Use `ps` to find the startup arguments of `kubelet`
    bob@k8s-node2:~$ ps -ef |grep kubelet
    root 751 1 2 04:17 ? 00:00:17 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --container-runtime-endpoint=unix:///var/run/containerd/containerd.sock --pod-infra-container-image=k8s-registry.keeponline.cn/k8s/pause:3.10
    bob 947 816 0 04:31 pts/0 00:00:00 grep kubelet
    # Change the resolvConf path in the file passed via --config
    bob@k8s-node2:~$ sudo cat /var/lib/kubelet/config.yaml
    apiVersion: kubelet.config.k8s.io/v1beta1
    authentication:
      anonymous:
        enabled: false
      webhook:
        cacheTTL: 0s
        enabled: true
      x509:
        clientCAFile: /etc/kubernetes/pki/ca.crt
    authorization:
      mode: Webhook
      webhook:
        cacheAuthorizedTTL: 0s
        cacheUnauthorizedTTL: 0s
    cgroupDriver: systemd
    clusterDNS:
    - 10.96.0.10
    clusterDomain: cluster.local
    containerRuntimeEndpoint: ""
    cpuManagerReconcilePeriod: 0s
    crashLoopBackOff: {}
    evictionPressureTransitionPeriod: 0s
    fileCheckFrequency: 0s
    healthzBindAddress: 127.0.0.1
    healthzPort: 10248
    httpCheckFrequency: 0s
    imageMaximumGCAge: 0s
    imageMinimumGCAge: 0s
    kind: KubeletConfiguration
    logging:
      flushFrequency: 0
      options:
        json:
          infoBufferSize: "0"
        text:
          infoBufferSize: "0"
      verbosity: 0
    memorySwap: {}
    nodeStatusReportFrequency: 0s
    nodeStatusUpdateFrequency: 0s
    resolvConf: /run/systemd/resolve/resolv.conf   # change this to /etc/resolv.conf
    rotateCertificates: true
    runtimeRequestTimeout: 0s
    shutdownGracePeriod: 0s
    shutdownGracePeriodCriticalPods: 0s
    staticPodPath: /etc/kubernetes/manifests
    streamingConnectionIdleTimeout: 0s
    syncFrequency: 0s
    volumeStatsAggPeriod: 0s
    # Restart kubelet
    bob@k8s-node2:~$ sudo systemctl restart kubelet
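The edit itself is a one-line substitution, so it can also be scripted when several nodes need it. The sketch below is an illustration, not part of the original procedure: `set_resolv_conf` is a hypothetical helper, it relies on GNU sed's `-i` option, and the demo runs against a scratch file instead of the real kubelet configuration.

```shell
# Point resolvConf in a kubelet config file at the given resolv.conf path.
# $1: kubelet config file; $2: replacement path (defaults to /etc/resolv.conf).
set_resolv_conf() {
  sed -i "s|^resolvConf:.*|resolvConf: ${2:-/etc/resolv.conf}|" "$1"
}

# Demo on a scratch copy so the real /var/lib/kubelet/config.yaml is untouched.
tmp=$(mktemp)
printf 'kind: KubeletConfiguration\nresolvConf: /run/systemd/resolve/resolv.conf\n' > "$tmp"
set_resolv_conf "$tmp"
cat "$tmp"
rm -f "$tmp"
```

On a worker node the same sed expression would be run with sudo against /var/lib/kubelet/config.yaml, followed by the kubelet restart shown above.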

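# Links that may be useful

Official k8s documentation: https://kubernetes.io/zh-cn/docs/home

Docker registry mirrors usable from mainland China: https://github.com/dongyubin/DockerHub

registry.k8s.io mirror usable from mainland China: https://docker.aityp.com

Watt Toolkit (a Steam accelerator that also helps reach sites such as GitHub): https://steampp.net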